This is the first post in this series of machine learning 👾 blogs! The series will cover various concepts in ML, AI, and reinforcement learning, as well as some coding 💻. Please show some love ❤️ (it's always a motivation, you know! 🤪).

The first algorithm that we'll break down 🔩 is the naive Bayes classifier. This post covers only the basic theory; the upcoming posts will cover the code and the mechanics ⚙️ behind it.

A classifier is a machine learning model that is used to discriminate between different objects based on certain features. A naive Bayes classifier is a probabilistic machine learning model used for classification tasks. At its core, the classifier is based on Bayes' theorem.
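For reference, here is Bayes' theorem in its usual textbook form. The symbols $A$ and $B$ are generic events assumed for illustration (they are not taken from this post), with $P(B) \neq 0$:

$$
P(A \mid B) = \frac{P(B \mid A)\, P(A)}{P(B)}
$$

In a classification setting, $A$ plays the role of the class and $B$ the observed features.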

Example: Let's take an example to build some intuition. Consider the problem of deciding whether to play golf 🏌. The dataset is represented as below ⏬.

We classify whether the day is suitable for playing golf, given the features of the day. The columns represent these features and the rows represent individual entries. If we take the first row of the dataset, we can observe that it is not suitable for playing golf if the outlook is rainy, the temperature is hot 🌞, the humidity is high, and it is not windy 🌥.

We make two assumptions here. First, we assume that the predictors are independent of one another (this is the "naive" part). That is, if the temperature is hot, it does not necessarily mean that the humidity is high. Second, we assume that all the predictors have an equal effect on the outcome. That is, the day being windy does not carry more weight than any other feature in deciding whether to play golf.
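To make the two assumptions a bit more concrete, here is a minimal from-scratch sketch in Python. It estimates each probability by simple counting and multiplies the per-feature probabilities together, treating every feature the same way. The rows in `ROWS` are hypothetical: only the first one matches the row described above, and the rest are made up for illustration. The function names `train_golf` and `predict_golf` are also just illustrative.

```python
from collections import Counter, defaultdict

# Hypothetical toy rows: (outlook, temperature, humidity, windy) -> play golf?
# Only the first row comes from the text; the others are invented.
ROWS = [
    (("rainy", "hot", "high", False), "no"),
    (("rainy", "hot", "high", True), "no"),
    (("overcast", "hot", "high", False), "yes"),
    (("sunny", "mild", "high", False), "yes"),
    (("sunny", "cool", "normal", False), "yes"),
    (("sunny", "cool", "normal", True), "no"),
    (("overcast", "cool", "normal", True), "yes"),
    (("rainy", "mild", "high", False), "no"),
]

def train_golf(rows):
    """Count how often each class and each (feature, value) pair occurs."""
    class_counts = Counter(label for _, label in rows)
    # feature_counts[label][feature_index][value] = number of rows
    feature_counts = defaultdict(lambda: defaultdict(Counter))
    for features, label in rows:
        for i, value in enumerate(features):
            feature_counts[label][i][value] += 1
    return class_counts, feature_counts

def predict_golf(features, class_counts, feature_counts):
    """Return the best class and the unnormalised score of every class."""
    total = sum(class_counts.values())
    scores = {}
    for label, n in class_counts.items():
        score = n / total  # prior P(y)
        for i, value in enumerate(features):
            # P(x_i | y) by counting; independence lets us just multiply,
            # and no feature gets a larger weight than another.
            score *= feature_counts[label][i][value] / n
        scores[label] = score
    return max(scores, key=scores.get), scores

if __name__ == "__main__":
    model = train_golf(ROWS)
    print(predict_golf(("rainy", "hot", "high", False), *model))
```

On this toy data, a rainy, hot, humid, non-windy day is classified as "no". Note that a value never seen with a class drives that class's score to zero, which is why real implementations usually add some smoothing.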

For this example, Bayes' theorem can be rewritten as:
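In the usual notation, with $y$ as the class (play golf or not) and $x_1, \dots, x_n$ as the features of the day (these symbol names are assumed here for illustration), the independence assumption gives:

$$
P(y \mid x_1, \dots, x_n) = \frac{P(y)\, \prod_{i=1}^{n} P(x_i \mid y)}{P(x_1, \dots, x_n)}
$$

The denominator is the same for every class, so to pick a class we only need to compare the numerators:

$$
\hat{y} = \arg\max_{y}\; P(y) \prod_{i=1}^{n} P(x_i \mid y)
$$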

Types of Naive Bayes Classifier:

Multinomial Naive Bayes:

- This is mostly used for document classification problems, i.e., deciding whether a document belongs to the category of sports, politics, technology, etc. The features/predictors used by the classifier are the frequencies of the words present in the document.
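As a rough illustration of the word-frequency idea, here is a small from-scratch sketch in Python. The mini corpus in `DOCS` is made up, and two choices are my own assumptions rather than something stated above: add-one (Laplace) smoothing so unseen words do not zero out a class, and summing log-probabilities instead of multiplying to avoid underflow.

```python
import math
from collections import Counter

# Hypothetical mini corpus, invented for illustration.
DOCS = [
    ("the team won the match", "sports"),
    ("the striker scored a late goal", "sports"),
    ("parliament passed the budget vote", "politics"),
    ("the minister won the election", "politics"),
]

def train_multinomial(docs):
    """Collect per-class word frequencies and per-class document counts."""
    word_counts = {}        # label -> Counter of word frequencies
    doc_counts = Counter()  # label -> number of documents
    for text, label in docs:
        doc_counts[label] += 1
        word_counts.setdefault(label, Counter()).update(text.split())
    vocab = {w for counts in word_counts.values() for w in counts}
    return word_counts, doc_counts, vocab

def predict_multinomial(text, word_counts, doc_counts, vocab):
    """Return the class with the highest log-score for the given text."""
    total_docs = sum(doc_counts.values())
    scores = {}
    for label, counts in word_counts.items():
        total_words = sum(counts.values())
        score = math.log(doc_counts[label] / total_docs)  # log prior
        for word in text.split():
            if word not in vocab:
                continue  # words never seen in training are ignored
            # Add-one smoothing (an assumption of this sketch).
            score += math.log((counts[word] + 1) / (total_words + len(vocab)))
        scores[label] = score
    return max(scores, key=scores.get)

if __name__ == "__main__":
    model = train_multinomial(DOCS)
    print(predict_multinomial("the striker scored", *model))
```

Each occurrence of a word adds its own term to the score, so a word that appears three times counts three times. That is the "frequency" part of the multinomial model.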

Bernoulli Naive Bayes:

- This is similar to multinomial naive Bayes, but the predictors are boolean variables. The parameters that we use to predict the class variable take only the values yes or no, for example, whether a word occurs in the text or not.
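Here is a comparable sketch for the boolean case, again in plain Python. Each word is reduced to "present" or "absent", and, unlike the multinomial model, an absent word also contributes to the score. The corpus is hypothetical, and the add-one smoothing and log-scores are assumptions of this sketch.

```python
import math

# Hypothetical mini corpus, invented for illustration.
DOCS = [
    ("the team won the match", "sports"),
    ("the striker scored a late goal", "sports"),
    ("parliament passed the budget vote", "politics"),
    ("the minister won the election", "politics"),
]

def train_bernoulli(docs):
    """Estimate P(word present | class) for every vocabulary word."""
    vocab = sorted({w for text, _ in docs for w in text.split()})
    by_label = {}  # label -> list of word sets, one set per document
    for text, label in docs:
        by_label.setdefault(label, []).append(set(text.split()))
    presence = {
        label: {
            # Add-one smoothing over the two outcomes (present / absent).
            w: (sum(w in d for d in doc_sets) + 1) / (len(doc_sets) + 2)
            for w in vocab
        }
        for label, doc_sets in by_label.items()
    }
    priors = {label: len(ds) / len(docs) for label, ds in by_label.items()}
    return vocab, priors, presence

def predict_bernoulli(text, vocab, priors, presence):
    """Return the class with the highest log-score for the given text."""
    words = set(text.split())  # repeated words collapse to a single "yes"
    scores = {}
    for label, prior in priors.items():
        score = math.log(prior)
        for w in vocab:
            p = presence[label][w]
            # Present words use p, absent words use (1 - p).
            score += math.log(p if w in words else 1 - p)
        scores[label] = score
    return max(scores, key=scores.get)

if __name__ == "__main__":
    model = train_bernoulli(DOCS)
    print(predict_bernoulli("the striker scored a goal", *model))
```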

Gaussian Naive Bayes:

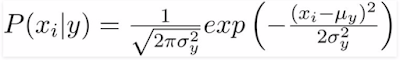

- When the predictors take continuous values rather than discrete ones, we assume that these values are sampled from a Gaussian distribution.

Gaussian Distribution (Normal Distribution)

Since the values in the dataset are now continuous rather than categorical, the formula for the conditional probability changes to:
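In the usual notation, with $\mu_y$ and $\sigma_y^2$ as the mean and variance of feature $x_i$ within class $y$ (symbol names assumed here for illustration), the Gaussian likelihood is:

$$
P(x_i \mid y) = \frac{1}{\sqrt{2\pi\sigma_y^2}} \exp\!\left(-\frac{(x_i - \mu_y)^2}{2\sigma_y^2}\right)
$$

A minimal Python sketch of that formula follows. The temperature readings in `TEMPS` are hypothetical, and the helper names `gaussian_pdf` and `fit_gaussian` are illustrative only.

```python
import math

def gaussian_pdf(x, mean, var):
    """Density of a normal distribution with the given mean and variance."""
    return math.exp(-((x - mean) ** 2) / (2 * var)) / math.sqrt(2 * math.pi * var)

def fit_gaussian(values_by_class):
    """Return {label: (mean, variance)} for one continuous feature."""
    params = {}
    for label, values in values_by_class.items():
        mean = sum(values) / len(values)
        # Population variance; it must be non-zero for gaussian_pdf to work.
        var = sum((v - mean) ** 2 for v in values) / len(values)
        params[label] = (mean, var)
    return params

# Hypothetical temperatures in degrees Celsius, grouped by "play golf?".
TEMPS = {
    "yes": [18.0, 20.0, 22.0, 21.0],
    "no": [30.0, 33.0, 35.0, 31.0],
}

if __name__ == "__main__":
    params = fit_gaussian(TEMPS)
    for label, (mean, var) in params.items():
        # P(temperature = 21 | label) under the fitted Gaussian
        print(label, gaussian_pdf(21.0, mean, var))
```

These per-feature densities then take the place of the counted probabilities in the product over features; everything else about the classifier stays the same.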

Hope you love this one 😀! This is my first blog in this machine learning series, which will also cover other algorithms like decision trees, K-NN, etc. We'll break down all of the algorithms from scratch 🧘‍♂️ without using many libraries (to understand what's going on behind them!). Please show some love on Twitter (https://twitter.com/gauravs66336054) and on my other social media pages (adding soon) ❣️

